使用 TensorFlow 來做簡單的手寫數字辨識

前言

相信大家都知道 TensorFlow 是可以用來建立跟訓練機器學習的模型,今天我們就來跑一個最簡單的 Neural Network,來辨識手寫數字,讓他吐出結果。有了這個程式之後,之後就可以再銜接其他的工具,例如讓機器人裝一個 camera,讀到 camera 的影像之後可以辨識數字,這樣就可以透過數字來對機器人下指令。或是可以再抽換辨識手寫數字的 node,改成辨識物體之類的。

接下來就讓我們開始吧。

TensorFlow 辨識手寫數字

首先,我們可以直接參考現成的程式碼,裡面兜出了一個 2 層的 Fully Connected Neural Network(也稱作 Multilayer Perceptron),裡面原本就有滿清楚的註解,讓大家易於理解:

1 | """ Neural Network. |

執行這個程式之後,你應該會看到以下的結果,表示功能正常,可以拿來辨識手寫數字的 dataset:

1 | ros@ros-K401UB:~/code/standalone/tensorflow$ python3.4 simple_nn.py |

但是,上面這個範例跑起來有點空虛,因為只是跑了一個 dataset,但我們是希望用來辨識一張圖片。

儲存可以辨識手寫數字的 Model

雖然要訓練這個範例很簡單,但我們不希望每次啟動程式時都重新訓練一次,所以我們希望可以將訓練完的 model 儲存下來,我們主要可以參考這個範例程式碼,然後把儲存 model 需要用到的幾個函式放到我們上面的範例中:

1 | """ Neural Network. |

把這個程式跑起來之後,你應該可以看到以下的輸出:

1 | ros@ros-K401UB:~/code/standalone/tensorflow$ python3.4 simple_nn_store.py |

將辨識手寫數字的 Model 變成可以吃一張 28x28 的影像並輸出答案

上面的兩步,我們已經把基本範例用起來,不過他還不太直覺,因為我們是希望讓手寫辨識的 node 可以吃進一張影像,然後吐出結果,所以在這一步我們要改寫一下。

一步一步來,首先我們可以將 training 的地方改成直接讀取 train 好的 model,然後把計算 accuracy 的地方改成直接輸出辨識的結果:

1 | from __future__ import print_function |

跑出來之後,你應該會看到下列結果:

1 | ros@ros-K401UB:~/code/standalone/tensorflow$ python3.4 simple_nn_srv.py |

然後,我們可以將他改成只吃一張影像,並輸出這張影像的辨識結果:

1 | """ Neural Network. |

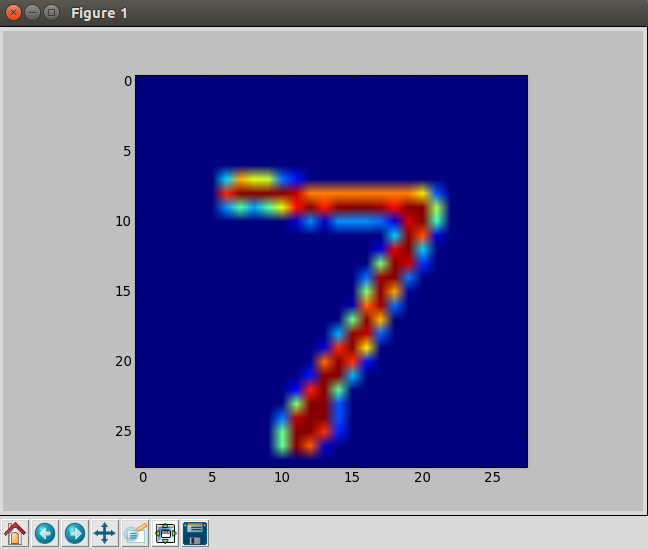

跑起來之後,你應該會先看到這個圖片的輸出:

然後會看到下列的命令列輸出:

1 | ros@ros-K401UB:~/code/standalone/tensorflow$ python3.4 simple_nn_srv.py |

可以看到自己的 model 成功辨識數字了!

總結

今天我們一起使用 TensorFlow 了一個可以辨識手寫數字的程式,其實這個範例可以用來做很多事情,例如你可以將辨識手寫數字的程式跟 ROS 串起來,變成可以辨識手寫數字的 node(只需要再串接 ROS),然後建立一個 service ,就可以讓其他人拿圖片來跟這個 node 要求辨識結果。或是將 node 裡面的功能和訊息格式修改一下,就可以做到物體辨識。之後有機會再一起來實作。

關於作者:

@pojenlai 演算法工程師,對機器人跟電腦視覺有少許研究,最近在學習看清事物的本質與改進自己的觀念

留言討論